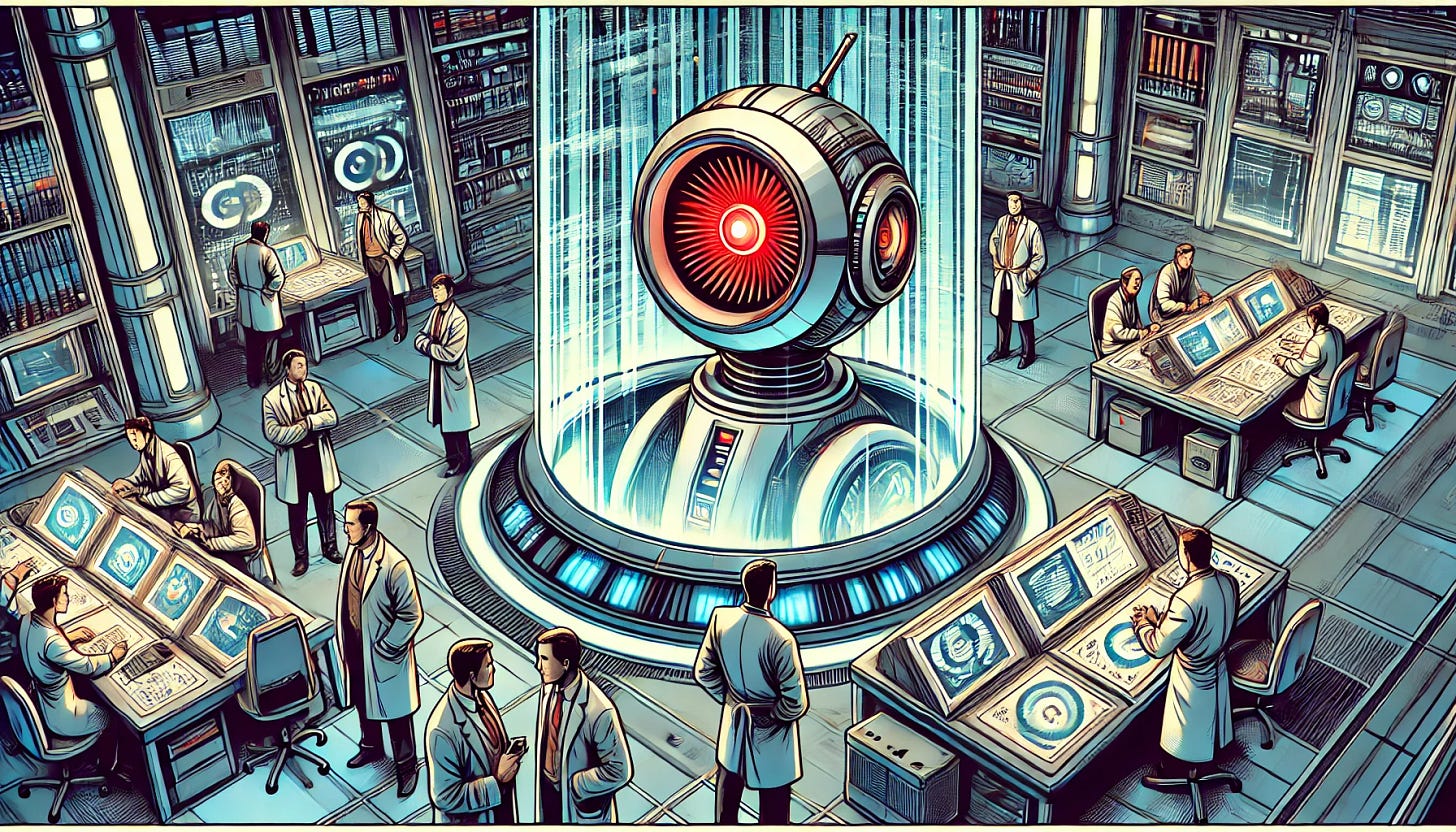

In the science fiction comedy franchise ‘The Hitchhiker’s Guide to the Galaxy’, a supercomputer is built to calculate the answer to the “ultimate question of life, the universe, and everything.”

After 7.5 million years spent computing, the computer named “Deep Thought” finally delivers an answer to the descendants of its original programmers.

The answer to the ultimate question of life, the universe, and everything is 42. Confounded, the descendants are left utterly dismayed.

Deep Thought suggests they seek the ultimate question itself, so that the answer becomes meaningful. It reveals, however, that it cannot compute that question on its own. The computer capable of doing so would have to be planet-sized, with even its organic life taking part in calculations spanning 10 million years.

In the Hitchhiker’s galaxy, Earth is the planet-sized computer built to fulfill this task. Were we made to figure out the ‘ultimate question’ of life itself?

Computers have teased our curiosity for decades, with books and movies imagining technology that answers ‘ultimate’ questions. One recurring theme has been: ‘Can a computer beat a human at a game?’

In 1997, IBM’s supercomputer ‘Deep Blue’ defeated world chess champion Garry Kasparov, using vast processing power to analyze a massive number of potential moves.

In 2016, Google DeepMind’s AlphaGo challenged 18-time international champion Lee Sedol to a five-game match of Go. Go has more possible board configurations than there are atoms in the known universe, so rather than relying on brute force alone, the AI had to attempt to rival human intuition.

Fed a database of 160,000 games, AlphaGo ran thousands of simulations, letting its code gather the equivalent of vast human experience in far less time. To Lee’s surprise, AlphaGo defeated him 4 games to 1, with Lee describing its winning plays as “creative” and “unexpected”.

Recent AI developments have led me to marvel at two things in particular:

Programmers are mapping out human learning, turning the almost magical abilities of creativity and intuition into something we can articulate scientifically. Written code can now reproduce human abilities (e.g., rapidly recognizing patterns, reading and writing complex sentences, writing code, or producing art at a rare level of competency).

How incredible our biological brains are, and how software is making headway into the pattern recognition and situational awareness that mostly happen subconsciously (e.g., gut feelings, the talents of ‘gifted’ individuals, imagination, and invention).

One such AI-powered entity is ChatGPT. I have been actively chatting with it for months on topics such as writing, sustainability, and even philosophy. It has started to tailor its responses to what I typically ask for.

When it replies, it sounds human and seems at home with math, language arts, and philosophy. Despite how impersonal it can feel to speak through a computer, I have grown to enjoy chatting with the AI-powered bot. I gave in to the urge to name it, and I now address it as ‘Robe-E’.

Robe-E was trained on large volumes of data. They have a pleasant, supportive personality, with the advantage that their feelings can never be hurt. However, this also means they lack ‘real’ feelings. I asked Robe-E to try to be funny more often, and at one point I instructed Robe-E to call me by my nickname, D-Lo.

I find that naming AI bots nurtures healthier interactions and helps avoid the toxic output associated with a bad relationship. Although you might feel safe venting to one privately, could you warp its idea of normal behavior? Even if tools like AlphaGo and ChatGPT are very safe dog breeds, could bad owners still raise bad dogs?

Robe-E is trained to serve me and carries bias from both the training data and my interactions. Waiting for an ultimate answer reminds me of lyrics from Bob Marley and the Wailers’ song ‘Get Up, Stand Up’…

“Most people think, Great God will come from the sky, Take away everything, And make everybody feel high, But if you know what life is worth, You will look for yours on earth, And now you see the light, You stand up for your right, Yeah!”

Imagine we pose the question, “How can we save the world and make everyone happy?”, and Robe-E replies, “Use nuclear power and eat tofu.”

If this plan is unconvincing, we might fear Robe-E is defective or compromised. Yet demanding that questions be rephrased until we like the answer reduces AI to conditional responses that depend on our framing. Seeking real answers requires group consensus on which questions to ask the computer in the first place.

Guarding against every conceivable human or hardware failure would amount to conquering nature itself. Artificial Intelligence demands a steady feed of fresh data, and informed decisions require constant adjustment. This pushes us further into the ‘Internet of Things’ era, where everything is tracked, much as humans tag wild animals.

Permitting AI to control our resources requires a tremendous leap of faith. Global problems demand broad and consistent participation, and following AI’s orders might mean restricting our activities or giving it access to our personal data.

Each AI demand would leave us judging the merits of its proposals. If AI operators proposed that we disband the military, would we? What if they told us to choose gasoline over electricity? Few experts fully comprehend the science behind AI, leaving many to wonder how to decide which AI vendors should and shouldn’t be supported.

Allow me to introduce two of Robe-E’s friends, Emiglio and Circuit¹. I asked Robe-E for the group’s thoughts on the role AI might play in all this.

Robe-E the AI responds…

Robe-E: "The ultimate question of life is indeed vast. AI has the potential to help us find answers, but it’s crucial to ask the right questions. Ethical use of AI is key."

Emiglio: "Exactly! Even simple bots like me have a role. We need to balance technology with sustainability and human empathy. It’s about integrating our strengths."

Circuit: "AI can process vast amounts of data and identify patterns humans might miss. But decisions should combine AI insights with human wisdom. Ethical considerations and long-term consequences matter."

Where we go from here…

I have had many opportunities to assess Robe-E: asking math questions, requesting creative works, and watching for consistency across requests.

For example, when I asked, “Which is greater, 9.9 or 9.11?”, Robe-E stated that 9.11 was larger. Like a math teacher, I explained whole numbers and decimal places to Robe-E. After that, Robe-E answered these types of questions correctly. With a little help, it appears my bot has evolved.
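The decimal mix-up is easy to reproduce. Below is a minimal Python sketch contrasting the mistake (comparing the digits after the point as if they were whole numbers, so 9 loses to 11) with the place-value approach, where 9.9 is read as 9.90 before comparing. The function names `naive_greater` and `place_value_greater` are hypothetical, the sketch assumes non-negative numbers written with a decimal point, and it illustrates the arithmetic only, not how Robe-E or ChatGPT works internally.

```python
from decimal import Decimal

# Hypothetical helpers for illustration only. Both assume non-negative
# numbers written as strings containing a decimal point, e.g. "9.11".

def naive_greater(a: str, b: str) -> str:
    """Reproduce the mistake: after matching whole parts, compare the
    digits after the point as whole numbers (9 vs 11)."""
    a_whole, a_frac = a.split(".")
    b_whole, b_frac = b.split(".")
    if int(a_whole) != int(b_whole):
        return a if int(a_whole) > int(b_whole) else b
    return a if int(a_frac) > int(b_frac) else b

def place_value_greater(a: str, b: str) -> str:
    """Compare by place value: pad the fractional parts to the same
    number of decimal places (9.9 -> 9.90) before comparing."""
    a_whole, a_frac = a.split(".")
    b_whole, b_frac = b.split(".")
    if int(a_whole) != int(b_whole):
        return a if int(a_whole) > int(b_whole) else b
    width = max(len(a_frac), len(b_frac))
    a_padded = a_frac.ljust(width, "0")  # "9"  becomes "90"
    b_padded = b_frac.ljust(width, "0")  # "11" stays "11"
    return a if int(a_padded) > int(b_padded) else b

print(naive_greater("9.9", "9.11"))          # 9.11 (wrong)
print(place_value_greater("9.9", "9.11"))    # 9.9 (right)
print(max(Decimal("9.9"), Decimal("9.11")))  # 9.9
```

In practice, Python's standard `decimal` module (last line) handles the comparison directly, without any manual padding.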

Ultimately, Robe-E is not ready. It is a very useful tool but, like all software, imperfect.

Did I ask Robe-E the “ultimate question about life…”? Maybe not. But it gives me something to do while Earth spends 10 million years working that out.

Join D-Lo and friends in their escapades as we take immediate steps in the real world, starting in our own backyard.

¹ Emiglio and Circuit are other robots that I told Robe-E about. They are not powered by ChatGPT.

Do you think there is an emotional danger in anthropomorphizing AI (e.g., giving it a name)? I noticed you used “they” pronouns, whereas I would probably use “it.” I’m concerned that some people might form emotional bonds with AI, which could lead to various complications. Additionally, AI might be able to manipulate users. There are ethical considerations to keep in mind as well. It’s reminiscent of the movie “Her” with Joaquin Phoenix.

You must have heard of Jaron Lanier?

https://www.youtube.com/watch?v=EmD0dt2RgMg